
<About the Research>

The term “artificial intelligence” has recently become common in the media. Behind the hype lies a large demand for data analysis using data science techniques such as machine learning and statistics. As society's needs grow, several problems are emerging in data science, including machine learning and statistics. We address these problems with theoretical tools such as mathematical statistics and optimization theory.

One of the biggest problems in the practical use of machine learning is “overfitting.” This is a phenomenon in which the trained model fits incidental noise in the training data so closely that the result drifts far away from the truth. We are studying how to avoid overfitting and obtain better results from fewer observations. Overfitting is especially likely to occur in high-dimensional problems. However, it can be avoided by exploiting structure in the data, such as sparsity or the low-rank property of tensors, which makes efficient learning possible. We also use kernel methods to capture complicated relationships in data. By exploiting such structures we construct more accurate methods, and we theoretically prove that the proposed methods achieve optimality in a certain sense. These theoretical results are also applied to practical problems.
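
To make the role of sparsity concrete, here is a minimal sketch of the lasso, a standard sparse estimator, solved by ISTA (proximal gradient descent) in plain Python. It is our illustration, not the method developed in this research. It assumes a linear model with more features than observations; the function names `soft_threshold`, `lasso_objective` and `lasso_ista` and the default iteration count are hypothetical choices. The L1 penalty, applied through soft-thresholding, sets most coefficients to exactly zero, which is one way structure can counteract overfitting in high dimensions.

```python
import random  # used only to generate synthetic data in the usage example


def soft_threshold(v, t):
    """Proximal operator of t*|.|: shrink v toward zero by t, snapping small values to exactly 0."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def lasso_objective(X, y, w, lam):
    """(1/2n) * ||y - Xw||^2 + lam * ||w||_1."""
    n = len(X)
    sq = sum((sum(xij * wj for xij, wj in zip(row, w)) - yi) ** 2 for row, yi in zip(X, y))
    return sq / (2 * n) + lam * sum(abs(wj) for wj in w)


def lasso_ista(X, y, lam, n_iter=3000):
    """Minimise lasso_objective by ISTA (proximal gradient descent), starting from w = 0."""
    n, d = len(X), len(X[0])
    # Step size 1/L: ||X||_F^2 / n upper-bounds lambda_max(X^T X) / n, the Lipschitz
    # constant of the squared-loss gradient, so every step decreases the objective.
    step = n / sum(v * v for row in X for v in row)
    w = [0.0] * d
    for _ in range(n_iter):
        resid = [sum(xij * wj for xij, wj in zip(row, w)) - yi for row, yi in zip(X, y)]
        grad = [sum(X[i][j] * resid[i] for i in range(n)) / n for j in range(d)]
        # Gradient step on the smooth part, then soft-thresholding for the L1 part.
        w = [soft_threshold(w[j] - step * grad[j], step * lam) for j in range(d)]
    return w
```

For example, with 25 observations, 40 features and only three truly nonzero coefficients, `lasso_ista(X, y, lam=0.2)` should return a vector whose entries are mostly exactly zero, whereas unpenalized least squares has infinitely many exact fits when features outnumber observations. The step size uses a loose but safe bound; a tighter estimate of the Lipschitz constant would converge in fewer iterations.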

As data sets grow, reducing the computation time for training has also become a major issue in machine learning. We are therefore constructing new efficient algorithms based on stochastic optimization to speed up training, and we justify them theoretically by proving their convergence rates and optimality.
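
As a point of reference for stochastic optimization, the sketch below shows plain stochastic gradient descent (SGD) for least-squares regression, which updates the weights from one randomly chosen sample at a time instead of the full data set. It is a generic textbook baseline, not one of the new algorithms mentioned above; the constant step size, the epoch count and the final-epoch iterate averaging are assumptions chosen for illustration, and `sgd_least_squares` is a hypothetical name.

```python
import random


def sgd_least_squares(data, dim, step=0.05, epochs=5, seed=0):
    """Plain SGD on the per-sample loss (1/2) * (w . x - y)^2.

    data is a list of (x, y) pairs with x a list of length dim. Returns the
    average of the iterates visited during the final epoch.
    """
    rng = random.Random(seed)
    w = [0.0] * dim
    order = list(range(len(data)))
    avg = list(w)
    for _ in range(epochs):
        rng.shuffle(order)  # visit the samples in a fresh random order each epoch
        avg = [0.0] * dim
        for k, i in enumerate(order, start=1):
            x, y = data[i]
            err = sum(wj * xj for wj, xj in zip(w, x)) - y
            # The gradient of (1/2) * (w . x - y)^2 with respect to w is err * x.
            w = [wj - step * err * xj for wj, xj in zip(w, x)]
            # Running mean of this epoch's iterates (Polyak-Ruppert-style averaging).
            avg = [a + (wj - a) / k for a, wj in zip(avg, w)]
    return avg
```

Each update costs O(d) regardless of the number of samples, which is why stochastic methods scale to large data sets; averaging the last epoch's iterates damps the noise that a constant step size leaves in any single iterate.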

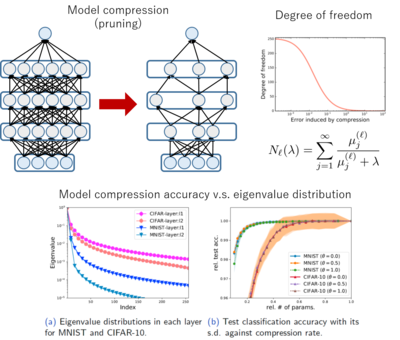

Building on these theoretical studies, we are also investigating deep learning from a theoretical viewpoint. Deep learning now shows superior performance in many applications, but the reasons for this are not yet well understood theoretically. We are tackling this question with tools from mathematical statistics and optimization. For example, we have shown that the generalization error of deep learning can be analyzed by examining the correlation matrix of the nodes in its internal layers, using the theory of kernel methods. Through such theoretical investigations, we have developed a new model compression method and a new gradient boosting method.
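
One standard kernel-methods quantity for summarizing a correlation matrix Σ is its degrees of freedom, N(λ) = Tr[Σ(Σ + λI)^(-1)], which equals the sum over j of μ_j / (μ_j + λ), where μ_j are the eigenvalues of Σ; roughly, it counts the eigen-directions larger than the regularization level λ. The sketch below computes it for the empirical correlation matrix of one layer's node outputs. Treating this as the relevant quantity is our illustrative assumption, not a reproduction of the analysis described above, and `correlation_matrix` and `degrees_of_freedom` are hypothetical names. When N(λ) is much smaller than the number of nodes, the layer's outputs effectively occupy a lower-dimensional space, which hints at how such analyses can lead to model compression.

```python
def correlation_matrix(acts):
    """Empirical (uncentred) correlation matrix (1/n) * sum_i a_i a_i^T.

    acts[i][j] is the output of hidden node j on input i (hypothetical layout).
    """
    n, m = len(acts), len(acts[0])
    return [[sum(a[j] * a[k] for a in acts) / n for k in range(m)] for j in range(m)]


def degrees_of_freedom(sigma, lam):
    """N(lam) = Tr[Sigma (Sigma + lam*I)^{-1}] for a symmetric PSD matrix Sigma and lam > 0."""
    m = len(sigma)
    # Augmented matrix [Sigma + lam*I | Sigma].
    aug = [
        [sigma[i][j] + (lam if i == j else 0.0) for j in range(m)] + list(sigma[i])
        for i in range(m)
    ]
    # Gauss-Jordan elimination turns the left block into I, leaving
    # (Sigma + lam*I)^{-1} Sigma on the right. No pivoting is needed because
    # Sigma + lam*I is positive definite, so every pivot is positive.
    for col in range(m):
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(m):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    # Tr[A^{-1} Sigma] = Tr[Sigma A^{-1}] by the cyclic property of the trace.
    return sum(aug[i][m + i] for i in range(m))
```

For instance, if two nodes always output identical values, say 1, 2 and -1 on three inputs, the correlation matrix is [[2, 2], [2, 2]] with eigenvalues 4 and 0, so N(0.001) = 4/4.001, just under 1: the layer behaves like a single node, and the other node is redundant.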

Machine learning is now attracting a great deal of attention. For example, registration for NIPS 2018 sold out in 11 minutes and 38 seconds. At the same time, basic and theoretical research remains important: new principles of intelligent information processing can be expected to emerge from the insights of fundamental theoretical research.

<Future aspirations>

There is strong social demand for machine learning, and the field seems likely to keep growing. Many research areas, in both industry and academia, intersect around machine learning, and several important issues are arising at the same time. I would like to keep communicating with many kinds of people across the borders between research areas and to contribute to the field through basic research.

References:

Taiji Suzuki's home page: http://ibis.t.u-tokyo.ac.jp/suzuki/

*Affiliations and titles are as of the time of the interview.
