PRESS RELEASE

- Research

- 2021

SyncUp: Vision-based Practice Support for Synchronized Dancing

Authors

Zhongyi Zhou*, Anran Xu, Koji Yatani

Abstract

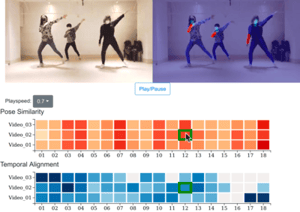

The beauty of synchronized dancing lies in the synchronization of body movements among multiple dancers. While dancers use camera recordings in their practice, standard video interfaces do not efficiently help them identify the segments where they are not well synchronized. As a result, these interfaces fail to close the tight loop of an iterative practice process (i.e., capturing a practice, reviewing the video, and practicing again). We present SyncUp, a system that provides multiple interactive visualizations to support the practice of synchronized dancing and to free users from manual inspection of recorded practice videos. By analyzing videos uploaded by users, SyncUp quantifies two aspects of synchronization in dancing: pose similarity among multiple dancers and temporal alignment of their movements. The system then highlights which body parts and which portions of the dance routine require further practice to achieve better synchronization. The results of our system evaluations show that our pose similarity estimation and temporal alignment predictions correlated well with human ratings. Participants in our qualitative user evaluation described the benefits and potential uses of SyncUp, confirming that it would enable quick iterative practice.
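To make the two quantities named in the abstract concrete, the following is a minimal illustrative sketch and not SyncUp's actual method, which the abstract does not specify. It assumes each dancer's pose is already available as 2D keypoints per frame. The keypoint names, the `LIMBS` list, and both functions are hypothetical. Pose similarity is approximated here by per-limb cosine similarity, and temporal alignment by the frame lag that minimizes the mean squared difference between two motion signals.

```python
import math

# Hypothetical limb definitions: each limb joins two named keypoints.
LIMBS = [
    ("shoulder_l", "elbow_l"),
    ("elbow_l", "wrist_l"),
    ("shoulder_r", "elbow_r"),
    ("elbow_r", "wrist_r"),
]


def limb_vectors(pose):
    """Map each limb to its 2D direction vector for one pose."""
    return {
        (a, b): (pose[b][0] - pose[a][0], pose[b][1] - pose[a][1])
        for a, b in LIMBS
    }


def cosine(u, v):
    """Cosine similarity of two 2D vectors; 0.0 if either has zero length."""
    nu, nv = math.hypot(*u), math.hypot(*v)
    if nu == 0 or nv == 0:
        return 0.0
    return (u[0] * v[0] + u[1] * v[1]) / (nu * nv)


def pose_similarity(pose_a, pose_b):
    """Per-limb cosine similarity between two dancers in the same frame."""
    va, vb = limb_vectors(pose_a), limb_vectors(pose_b)
    return {limb: cosine(va[limb], vb[limb]) for limb in va}


def estimate_lag(signal_a, signal_b, max_lag):
    """Frame lag of signal_b relative to signal_a (positive: b is late).

    Tries every lag in [-max_lag, max_lag] and keeps the one with the
    smallest mean squared difference over the overlapping frames.
    """
    best_lag, best_err = 0, math.inf
    for lag in range(-max_lag, max_lag + 1):
        pairs = [
            (signal_a[i], signal_b[i + lag])
            for i in range(len(signal_a))
            if 0 <= i + lag < len(signal_b)
        ]
        if not pairs:
            continue
        err = sum((x - y) ** 2 for x, y in pairs) / len(pairs)
        if err < best_err:
            best_lag, best_err = lag, err
    return best_lag
```

In this sketch, limbs with low similarity would be the body parts to flag for a given frame, and a non-zero lag would indicate that one dancer is moving ahead of or behind the other. A real system would also need pose estimation from video and normalization for camera angle and body size, none of which is covered here.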

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies: https://dl.acm.org/doi/abs/10.1145/3478120